Big Data, Load Testing, and Software Development—on One Smart Grid

When people think of big data and its capabilities, most picture fairly simple marketing and sales applications. But big data is also beginning to be used on a much larger scale: large as in vast energy conservation, and perhaps even large as in saving the planet.

Money is pouring into smart grid software and analytics services. As Megan Treacy at Treehugger recently pointed out, US utility companies will invest nearly $1.5 billion in the emerging technology over just the next seven years.

The prospect of dramatically improving how efficiently they use available energy isn't the only factor driving utilities' recent investments. According to a Greentech Media report that Treacy quotes, "The return on investment for these services is expected to be about six times what is spent in that time frame."

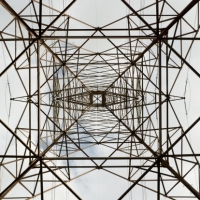

So how do smart grids do what they do, and why has it taken this long to develop them? One factor is the recent emergence of the cloud. Smart grids collect vast quantities of energy-use data from units ranging from a single home to an entire metropolitan area, and storing that data often requires massive remote data centers.

This immense volume of data is then aggregated and analyzed by a growing number of analytics companies, which are springing up left and right as full-scale adoption of the technology approaches.

With efficiency-driven analytical capabilities, smart grids and their software open up load testing opportunities the energy sector has never had before. Duke University's renowned medical campus comprises ninety buildings powered by fifty generators spread across more than 200 acres. Duke has brought in Blue Pillar for its "expertise in automated energy asset management systems for distributed energy resources."

Wikipedia helps explain how Duke and others rely on aggregation and on communication between generators that share a grid to make sure power is delivered where it's needed most and not wasted on areas that can run on far less:

> Using mathematical prediction algorithms it is possible to predict how many standby generators need to be used, to reach a certain failure rate. In the traditional grid, the failure rate can only be reduced at the cost of more standby generators.
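To make the standby-generator trade-off concrete, here is a minimal sketch using a simple binomial reliability model. It assumes every generator is identical and independently available with the same probability, and that a "failure" means fewer than the required number of generators are online. The function names and parameters (`shortfall_probability`, `standby_needed`, `p_available`, `target`) are hypothetical illustrations, not the method used by Duke, Blue Pillar, or any particular utility.

```python
from math import comb


def shortfall_probability(required: int, standby: int, p_available: float) -> float:
    """Probability that fewer than `required` of the (required + standby)
    generators are available.

    Hypothetical model: each generator is independently available with
    probability `p_available`, so the number available is binomial.
    """
    total = required + standby
    # Sum P(exactly k available) for every k that falls short of `required`.
    return sum(
        comb(total, k) * p_available**k * (1 - p_available) ** (total - k)
        for k in range(required)
    )


def standby_needed(
    required: int, p_available: float, target: float, max_standby: int = 100
) -> int:
    """Smallest number of standby generators that keeps the shortfall
    probability at or below `target` (hypothetical helper)."""
    for standby in range(max_standby + 1):
        if shortfall_probability(required, standby, p_available) <= target:
            return standby
    raise ValueError("target failure rate not reachable within max_standby")


if __name__ == "__main__":
    # Illustrative numbers only: 10 generators needed, each 95% available,
    # and an acceptable shortfall probability of 0.1%.
    print(standby_needed(required=10, p_available=0.95, target=0.001))
```

The search is a plain linear scan because the shortfall probability only falls as standby units are added, which mirrors the point in the quote: in this model, the only lever for a lower failure rate is more standby capacity. Real fleets have correlated failures (shared fuel supply, weather), which this independence assumption ignores.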

As with any emerging technology, there are security concerns about trusting the cloud or third-party analytics companies with such sensitive information. Still, the unprecedented benefits that smart grids offer the planet and its inhabitants far outweigh the risks of using them, no matter how often attacks are attempted or how large the parties behind them are.