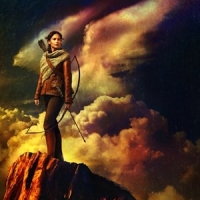

The Test Automation Hunger Games

The life of an automated test, whether it acts like it or not, is a game of survival—à la "The Hunger Games." Thrust into the arena of continuous integration, tests must constantly prove their worth and their usefulness. They must fight for their right to exist and be heard.

A red test is an eyesore. It may be a very useful eyesore that reveals a real problem, but it is an eyesore nonetheless, and it can wreck productivity the way a small leak can sink a great ship.

Many teams have experienced this exact form of tester’s Kryptonite. Tools make the tests easy to write, and a suite of hundreds of tests accumulates. Some tests begin to fail, whether from real bugs or from brittle test design, and the testers try to investigate what’s happening, only to find that nailing down the problem is difficult and time-consuming because the tests, despite having been so easy to write, are inscrutable at a glance.

The fast pace of agile development means fixing the tests becomes virtually impossible, despite the testers’ best intentions, and the red blazes a path across more and more tests. The testers then ignore the failing tests, because they are intimidated by the indomitable mass of failures. The tests are rendered impotent. Issued their death sentence in the automation hunger games, the tests are then scrapped, and the team starts over with a different tool.

What is it that separates tests that survive from those that drown in pools of red pixels? Strong tests minimize pain points and wasted time. They are human-readable and easily understood by simply looking at them, with no need for reverse engineering. They fight for their existence by communicating their purpose directly and succinctly. When these tests fail and a tester with only five minutes to spare must figure out what happened, the tester is not bombarded with questions and doubts about the purpose of the test or what it does.

Tests with sticking power do not test the same functionality over and over. Strong tests describe what is tested—not just how it is tested—making it easy to change the automation code behind the tests when the application infrastructure changes. Tests that survive decouple functionality; integration tests cover integration, not business rules.
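To make the "what, not how" idea concrete, here is a minimal sketch in Python. Everything in it is hypothetical and chosen only for illustration: the `FakeShop` application stand-in, the `ShopDriver` automation layer, and the 10 percent loyalty discount rule are assumptions, not part of any real tool or product. The tests state the behavior being checked in plain terms, while the driver is the only place that knows how to talk to the application.

```python
from dataclasses import dataclass, field


@dataclass
class FakeShop:
    """Hypothetical in-memory stand-in for the application under test."""

    loyalty_members: set[str] = field(default_factory=set)

    def price_cents_for(self, customer: str, list_price_cents: int) -> int:
        # Assumed business rule, for illustration only: members pay 90%.
        if customer in self.loyalty_members:
            return list_price_cents * 90 // 100
        return list_price_cents


class ShopDriver:
    """The 'how': the only layer that knows how to reach the application.

    If the infrastructure changes (for example, from a UI to an API),
    this class changes and the tests below stay as they are.
    """

    def __init__(self, shop: FakeShop) -> None:
        self._shop = shop

    def enroll_in_loyalty_program(self, customer: str) -> None:
        self._shop.loyalty_members.add(customer)

    def quoted_price_cents(self, customer: str, list_price_cents: int) -> int:
        return self._shop.price_cents_for(customer, list_price_cents)


# The 'what': each test name and body reads as a statement of behavior.
def test_loyalty_member_gets_ten_percent_discount() -> None:
    shop = ShopDriver(FakeShop())
    shop.enroll_in_loyalty_program("alice")
    assert shop.quoted_price_cents("alice", 10_000) == 9_000


def test_non_member_pays_list_price() -> None:
    shop = ShopDriver(FakeShop())
    assert shop.quoted_price_cents("bob", 10_000) == 10_000
```

A tester with five minutes to spare can read either test name and know what broke. Each test also covers one rule exactly once, so a single bug is less likely to turn half the suite red.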

These kinds of tests are more than just bug nets. By encouraging faster feedback and offering more transparency, they simultaneously inspire better design and process improvement, which means quality can be built in. When these tests fail, they are more likely to serve as real indicators of application health, rather than as prognosticators of testers’ burgeoning stress levels.