Software Testing and Monitoring in DevOps

In 2007, software engineer Ed Keyes delivered a talk at the Google Test Automation Conference titled “Sufficiently Advanced Monitoring is Indistinguishable from Testing.” The title, a riff on Arthur C. Clarke’s famous third law, “Any sufficiently advanced technology is indistinguishable from magic,” spun off a lot of misconceptions about modern software development. Namely, that software testing is avoidable, and that it is okay to discover problems in production and fix them later.

Ten years later, software-monitoring tools are far more sophisticated than they were back then, yet they still have not replaced people. Let’s take a look at why.

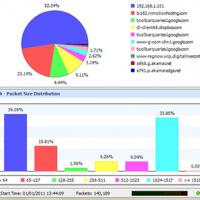

First, I’m going to sort through the big ball of mud that is monitoring. The simplest monitoring tools send a ping that answers a single question: “Are you alive?” At the other end of the spectrum are so-called application performance monitoring (APM) tools. These perform the pings, measure server resource usage (“server” itself has become a complicated term with the arrival of virtual machines and, now, containers), measure response time and latency, and run complete workflow calls against APIs. All of this data is displayed in a dashboard and reported against configured thresholds for each measurement.
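To make the threshold idea concrete, here is a minimal Python sketch of a single check: it times a probe, then classifies the result against a warning and a critical latency threshold, roughly the way a dashboard might color a measurement. The `probe` callable, the `run_check` function, and the threshold values are hypothetical illustrations, not any particular tool’s API; a real monitor would issue an actual ping or HTTP request and run checks on a schedule.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class CheckResult:
    alive: bool        # did the target answer "I'm alive"?
    latency_ms: float  # how long the probe took, in milliseconds
    status: str        # "OK", "WARN", or "CRITICAL"


def run_check(probe: Callable[[], bool], warn_ms: float, crit_ms: float) -> CheckResult:
    """Run one probe, time it, and classify it against configured thresholds.

    `probe` is a hypothetical stand-in for a real ping or health-check call.
    It returns True if the target responded; any exception counts as "down".
    """
    start = time.perf_counter()
    try:
        alive = probe()
    except Exception:
        # Broad catch for the sketch: a timeout or refused connection means "not alive".
        alive = False
    latency_ms = (time.perf_counter() - start) * 1000.0

    # A dead target, or one slower than the critical threshold, is CRITICAL.
    if not alive or latency_ms >= crit_ms:
        status = "CRITICAL"
    elif latency_ms >= warn_ms:
        status = "WARN"
    else:
        status = "OK"
    return CheckResult(alive, latency_ms, status)
```

For instance, `run_check(lambda: True, warn_ms=200, crit_ms=1000)` reports `OK` for a probe that answers instantly, while a probe that raises an exception is reported as `CRITICAL`. Note that a check like this only tells you that a threshold was crossed, not why.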

These data collection and reporting tools are sophisticated and can deliver very useful information. Developers and operations staff regularly use monitoring to get a baseline understanding of what is happening in production and a feel for the customer experience. But monitoring in modern software development methods such as DevOps rests on a lot of hidden assumptions.

In most of the highly successful DevOps shops I hear about, there is a base of test-driven development, Extreme Programming and pairing, collaborative work to prepare development tasks, acceptance by product managers, and continuous integration servers and build pipelines. Each of these things, when done well, increases the ability of the developers to discover important quality-related information.

While there may not be people in a tester role on staff, each person is an expert in the part of the product they work on, the layer of the technology stack they work in, and the tools at their disposal. For example, a microservices developer who writes unit tests, then integration tests with an API testing framework, and then performs exploratory testing is an expert in those areas. They might even be using monitoring tools in test and staging environments. This style of development shifts much of the boundary testing and basic form-submission testing onto the developers.

When you hear about software companies developing products without any dedicated testers on staff, or even contractors (I’m looking at you, Yahoo), it isn’t because monitoring has replaced them. It’s because there are thriving cultures and methods that help developers better understand product quality.

Software monitoring is not replacing anyone. It is a last line of defense: when a long line of testing comes before it, monitoring catches the problems that slip through to production and limits how long customers are exposed to them. Most of the time, important problems will be caught before production by a person performing skilled software testing.